We will apply OpenCV edge detection to a live webcam feed in real time. The same approach also works with an IP camera, by passing the camera's stream URL to `cv2.VideoCapture` instead of the device index `0`. First we write a script that shows the webcam feed without edge detection, and then we modify it so that it shows edge detection in real time.

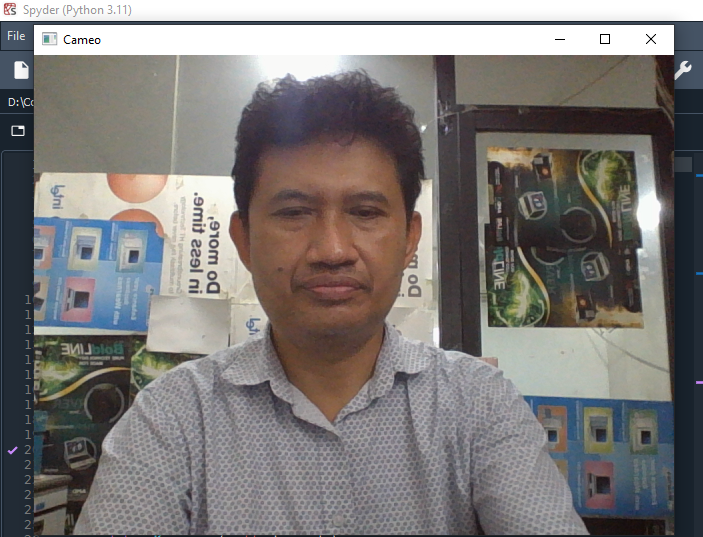

The plain webcam view, without real-time edge detection, is built as follows.

- Source code managers.py

```python
import cv2
import numpy
import time


class CaptureManager(object):

    def __init__(self, capture, previewWindowManager = None,
                 shouldMirrorPreview = False):

        self.previewWindowManager = previewWindowManager
        self.shouldMirrorPreview = shouldMirrorPreview

        self._capture = capture
        self._channel = 0
        self._enteredFrame = False
        self._frame = None
        self._imageFilename = None
        self._videoFilename = None
        self._videoEncoding = None
        self._videoWriter = None

        self._startTime = None
        self._framesElapsed = 0
        self._fpsEstimate = None

    @property
    def channel(self):
        return self._channel

    @channel.setter
    def channel(self, value):
        if self._channel != value:
            self._channel = value
            self._frame = None

    @property
    def frame(self):
        if self._enteredFrame and self._frame is None:
            _, self._frame = self._capture.retrieve(
                self._frame, self.channel)
        return self._frame

    @property
    def isWritingImage(self):
        return self._imageFilename is not None

    @property
    def isWritingVideo(self):
        return self._videoFilename is not None

    def enterFrame(self):
        """Capture the next frame, if any."""

        # But first, check that any previous frame was exited.
        assert not self._enteredFrame, \
            'previous enterFrame() had no matching exitFrame()'

        if self._capture is not None:
            self._enteredFrame = self._capture.grab()

    def exitFrame(self):
        """Draw to the window. Write to files. Release the frame."""

        # Check whether any grabbed frame is retrievable.
        # The getter may retrieve and cache the frame.
        if self.frame is None:
            self._enteredFrame = False
            return

        # Update the FPS estimate and related variables.
        if self._framesElapsed == 0:
            self._startTime = time.perf_counter()
        else:
            timeElapsed = time.perf_counter() - self._startTime
            self._fpsEstimate = self._framesElapsed / timeElapsed
        self._framesElapsed += 1

        # Draw to the window, if any.
        if self.previewWindowManager is not None:
            if self.shouldMirrorPreview:
                mirroredFrame = numpy.fliplr(self._frame)
                self.previewWindowManager.show(mirroredFrame)
            else:
                self.previewWindowManager.show(self._frame)

        # Write to the image file, if any.
        if self.isWritingImage:
            cv2.imwrite(self._imageFilename, self._frame)
            self._imageFilename = None

        # Write to the video file, if any.
        self._writeVideoFrame()

        # Release the frame.
        self._frame = None
        self._enteredFrame = False

    def writeImage(self, filename):
        """Write the next exited frame to an image file."""
        self._imageFilename = filename

    def startWritingVideo(
            self, filename,
            encoding = cv2.VideoWriter_fourcc('M','J','P','G')):
        """Start writing exited frames to a video file."""
        self._videoFilename = filename
        self._videoEncoding = encoding

    def stopWritingVideo(self):
        """Stop writing exited frames to a video file."""
        self._videoFilename = None
        self._videoEncoding = None
        self._videoWriter = None

    def _writeVideoFrame(self):

        if not self.isWritingVideo:
            return

        if self._videoWriter is None:
            fps = self._capture.get(cv2.CAP_PROP_FPS)
            if numpy.isnan(fps) or fps <= 0.0:
                # The capture's FPS is unknown so use an estimate.
                if self._framesElapsed < 20:
                    # Wait until more frames elapse so that the
                    # estimate is more stable.
                    return
                else:
                    fps = self._fpsEstimate
            size = (int(self._capture.get(
                        cv2.CAP_PROP_FRAME_WIDTH)),
                    int(self._capture.get(
                        cv2.CAP_PROP_FRAME_HEIGHT)))
            self._videoWriter = cv2.VideoWriter(
                self._videoFilename, self._videoEncoding,
                fps, size)

        self._videoWriter.write(self._frame)


class WindowManager(object):

    def __init__(self, windowName, keypressCallback = None):
        self.keypressCallback = keypressCallback

        self._windowName = windowName
        self._isWindowCreated = False

    @property
    def isWindowCreated(self):
        return self._isWindowCreated

    def createWindow(self):
        cv2.namedWindow(self._windowName)
        self._isWindowCreated = True

    def show(self, frame):
        cv2.imshow(self._windowName, frame)

    def destroyWindow(self):
        cv2.destroyWindow(self._windowName)
        self._isWindowCreated = False

    def processEvents(self):
        keycode = cv2.waitKey(1)
        if self.keypressCallback is not None and keycode != -1:
            self.keypressCallback(keycode)
```

- Source code cameo.py

```python
import cv2
from managers import WindowManager, CaptureManager


class Cameo(object):

    def __init__(self):
        self._windowManager = WindowManager('Cameo',
                                            self.onKeypress)
        self._captureManager = CaptureManager(
            cv2.VideoCapture(0), self._windowManager, True)

    def run(self):
        """Run the main loop."""
        self._windowManager.createWindow()
        while self._windowManager.isWindowCreated:
            self._captureManager.enterFrame()
            frame = self._captureManager.frame

            if frame is not None:
                # TODO: Filter the frame (Chapter 3).
                pass

            self._captureManager.exitFrame()
            self._windowManager.processEvents()

    def onKeypress(self, keycode):
        """Handle a keypress.

        space  -> Take a screenshot.
        tab    -> Start/stop recording a screencast.
        escape -> Quit.
        """
        if keycode == 32: # space
            self._captureManager.writeImage('screenshot.png')
        elif keycode == 9: # tab
            if not self._captureManager.isWritingVideo:
                self._captureManager.startWritingVideo(
                    'screencast.avi')
            else:
                self._captureManager.stopWritingVideo()
        elif keycode == 27: # escape
            self._windowManager.destroyWindow()


if __name__=="__main__":
    Cameo().run()
```

- Result

- Complete source code

The complete source code is available at https://github.com/PacktPublishing/Learning-OpenCV-4-Computer-Vision-with-Python-Third-Edition/tree/master/chapter02/cameo
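`CaptureManager.exitFrame` keeps a running frame-rate estimate, which `_writeVideoFrame` falls back on when the camera does not report `CAP_PROP_FPS`: the number of frames counted so far divided by the seconds since the first frame. The sketch below reproduces only that bookkeeping with the standard library, assuming timestamps can be passed in explicitly so it runs without a webcam. The class name `FpsEstimator` is hypothetical and not part of the book's code, and the zero-time guard is an addition of this sketch.

```python
import time


class FpsEstimator(object):
    """Hypothetical helper mirroring the FPS bookkeeping in exitFrame."""

    def __init__(self):
        self._startTime = None
        self._framesElapsed = 0
        self.fpsEstimate = None

    def tick(self, now=None):
        """Record one frame and return the current estimate (or None)."""
        if now is None:
            now = time.perf_counter()
        if self._framesElapsed == 0:
            # The first frame only starts the clock.
            self._startTime = now
        else:
            timeElapsed = now - self._startTime
            if timeElapsed > 0:  # guard added in this sketch only
                # Frames counted before this one / seconds since the first.
                self.fpsEstimate = self._framesElapsed / timeElapsed
        self._framesElapsed += 1
        return self.fpsEstimate


if __name__ == "__main__":
    estimator = FpsEstimator()
    # Simulate 31 frames arriving exactly 1/30 s apart.
    for i in range(31):
        estimator.tick(now=i / 30)
    print(estimator.fpsEstimate)  # prints 30.0
```

Passing `now` explicitly is only a testing convenience; in a real loop `tick()` would be called once per frame without arguments, just as `exitFrame` calls `time.perf_counter()` itself.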

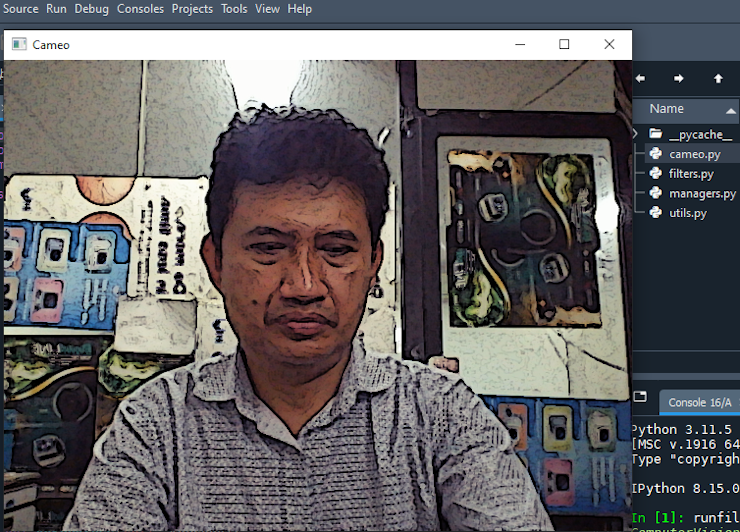

The webcam view with real-time edge detection using OpenCV is built as follows.

- New file filters.py

```python
import cv2
import numpy
import utils


def recolorRC(src, dst):
    """Simulate conversion from BGR to RC (red, cyan).

    The source and destination images must both be in BGR format.

    Blues and greens are replaced with cyans. The effect is similar
    to Technicolor Process 2 (used in early color movies) and CGA
    Palette 3 (used in early color PCs).

    Pseudocode:
    dst.b = dst.g = 0.5 * (src.b + src.g)
    dst.r = src.r
    """
    b, g, r = cv2.split(src)
    cv2.addWeighted(b, 0.5, g, 0.5, 0, b)
    cv2.merge((b, b, r), dst)


def recolorRGV(src, dst):
    """Simulate conversion from BGR to RGV (red, green, value).

    The source and destination images must both be in BGR format.

    Blues are desaturated. The effect is similar to Technicolor
    Process 1 (used in early color movies).

    Pseudocode:
    dst.b = min(src.b, src.g, src.r)
    dst.g = src.g
    dst.r = src.r
    """
    b, g, r = cv2.split(src)
    cv2.min(b, g, b)
    cv2.min(b, r, b)
    cv2.merge((b, g, r), dst)


def recolorCMV(src, dst):
    """Simulate conversion from BGR to CMV (cyan, magenta, value).

    The source and destination images must both be in BGR format.

    Yellows are desaturated. The effect is similar to CGA Palette 1
    (used in early color PCs).

    Pseudocode:
    dst.b = max(src.b, src.g, src.r)
    dst.g = src.g
    dst.r = src.r
    """
    b, g, r = cv2.split(src)
    cv2.max(b, g, b)
    cv2.max(b, r, b)
    cv2.merge((b, g, r), dst)


def blend(foregroundSrc, backgroundSrc, dst, alphaMask):

    # Calculate the normalized alpha mask.
    maxAlpha = numpy.iinfo(alphaMask.dtype).max
    normalizedAlphaMask = (1.0 / maxAlpha) * alphaMask

    # Calculate the normalized inverse alpha mask.
    normalizedInverseAlphaMask = \
        numpy.ones_like(normalizedAlphaMask)
    normalizedInverseAlphaMask[:] = \
        normalizedInverseAlphaMask - normalizedAlphaMask

    # Split the channels from the sources.
    foregroundChannels = cv2.split(foregroundSrc)
    backgroundChannels = cv2.split(backgroundSrc)

    # Blend each channel.
    numChannels = len(foregroundChannels)
    i = 0
    while i < numChannels:
        backgroundChannels[i][:] = \
            normalizedAlphaMask * foregroundChannels[i] + \
            normalizedInverseAlphaMask * backgroundChannels[i]
        i += 1

    # Merge the blended channels into the destination.
    cv2.merge(backgroundChannels, dst)


def strokeEdges(src, dst, blurKsize=7, edgeKsize=5):
    if blurKsize >= 3:
        blurredSrc = cv2.medianBlur(src, blurKsize)
        graySrc = cv2.cvtColor(blurredSrc, cv2.COLOR_BGR2GRAY)
    else:
        graySrc = cv2.cvtColor(src, cv2.COLOR_BGR2GRAY)
    cv2.Laplacian(graySrc, cv2.CV_8U, graySrc, ksize=edgeKsize)
    normalizedInverseAlpha = (1.0 / 255) * (255 - graySrc)
    channels = cv2.split(src)
    for channel in channels:
        channel[:] = channel * normalizedInverseAlpha
    cv2.merge(channels, dst)


class VFuncFilter(object):
    """A filter that applies a function to V (or all of BGR)."""

    def __init__(self, vFunc=None, dtype=numpy.uint8):
        length = numpy.iinfo(dtype).max + 1
        self._vLookupArray = utils.createLookupArray(vFunc, length)

    def apply(self, src, dst):
        """Apply the filter with a BGR or gray source/destination."""
        srcFlatView = numpy.ravel(src)
        dstFlatView = numpy.ravel(dst)
        utils.applyLookupArray(self._vLookupArray, srcFlatView,
                               dstFlatView)


class VCurveFilter(VFuncFilter):
    """A filter that applies a curve to V (or all of BGR)."""

    def __init__(self, vPoints, dtype=numpy.uint8):
        VFuncFilter.__init__(self, utils.createCurveFunc(vPoints),
                             dtype)


class BGRFuncFilter(object):
    """A filter that applies different functions to each of BGR."""

    def __init__(self, vFunc=None, bFunc=None, gFunc=None,
                 rFunc=None, dtype=numpy.uint8):
        length = numpy.iinfo(dtype).max + 1
        self._bLookupArray = utils.createLookupArray(
            utils.createCompositeFunc(bFunc, vFunc), length)
        self._gLookupArray = utils.createLookupArray(
            utils.createCompositeFunc(gFunc, vFunc), length)
        self._rLookupArray = utils.createLookupArray(
            utils.createCompositeFunc(rFunc, vFunc), length)

    def apply(self, src, dst):
        """Apply the filter with a BGR source/destination."""
        b, g, r = cv2.split(src)
        utils.applyLookupArray(self._bLookupArray, b, b)
        utils.applyLookupArray(self._gLookupArray, g, g)
        utils.applyLookupArray(self._rLookupArray, r, r)
        cv2.merge([b, g, r], dst)


class BGRCurveFilter(BGRFuncFilter):
    """A filter that applies different curves to each of BGR."""

    def __init__(self, vPoints=None, bPoints=None,
                 gPoints=None, rPoints=None, dtype=numpy.uint8):
        BGRFuncFilter.__init__(self,
                               utils.createCurveFunc(vPoints),
                               utils.createCurveFunc(bPoints),
                               utils.createCurveFunc(gPoints),
                               utils.createCurveFunc(rPoints), dtype)


class BGRCrossProcessCurveFilter(BGRCurveFilter):
    """A filter that applies cross-process-like curves to BGR."""

    def __init__(self, dtype=numpy.uint8):
        BGRCurveFilter.__init__(
            self,
            bPoints=[(0,20),(255,235)],
            gPoints=[(0,0),(56,39),(208,226),(255,255)],
            rPoints=[(0,0),(56,22),(211,255),(255,255)],
            dtype=dtype)


class BGRPortraCurveFilter(BGRCurveFilter):
    """A filter that applies Portra-like curves to BGR."""

    def __init__(self, dtype=numpy.uint8):
        BGRCurveFilter.__init__(
            self,
            vPoints=[(0,0),(23,20),(157,173),(255,255)],
            bPoints=[(0,0),(41,46),(231,228),(255,255)],
            gPoints=[(0,0),(52,47),(189,196),(255,255)],
            rPoints=[(0,0),(69,69),(213,218),(255,255)],
            dtype=dtype)


class BGRProviaCurveFilter(BGRCurveFilter):
    """A filter that applies Provia-like curves to BGR."""

    def __init__(self, dtype=numpy.uint8):
        BGRCurveFilter.__init__(
            self,
            bPoints=[(0,0),(35,25),(205,227),(255,255)],
            gPoints=[(0,0),(27,21),(196,207),(255,255)],
            rPoints=[(0,0),(59,54),(202,210),(255,255)],
            dtype=dtype)


class BGRVelviaCurveFilter(BGRCurveFilter):
    """A filter that applies Velvia-like curves to BGR."""

    def __init__(self, dtype=numpy.uint8):
        BGRCurveFilter.__init__(
            self,
            vPoints=[(0,0),(128,118),(221,215),(255,255)],
            bPoints=[(0,0),(25,21),(122,153),(165,206),(255,255)],
            gPoints=[(0,0),(25,21),(95,102),(181,208),(255,255)],
            rPoints=[(0,0),(41,28),(183,209),(255,255)],
            dtype=dtype)


class VConvolutionFilter(object):
    """A filter that applies a convolution to V (or all of BGR)."""

    def __init__(self, kernel):
        self._kernel = kernel

    def apply(self, src, dst):
        """Apply the filter with a BGR or gray source/destination."""
        cv2.filter2D(src, -1, self._kernel, dst)


class BlurFilter(VConvolutionFilter):
    """A blur filter with a 2-pixel radius."""

    def __init__(self):
        kernel = numpy.array([[0.04, 0.04, 0.04, 0.04, 0.04],
                              [0.04, 0.04, 0.04, 0.04, 0.04],
                              [0.04, 0.04, 0.04, 0.04, 0.04],
                              [0.04, 0.04, 0.04, 0.04, 0.04],
                              [0.04, 0.04, 0.04, 0.04, 0.04]])
        VConvolutionFilter.__init__(self, kernel)


class SharpenFilter(VConvolutionFilter):
    """A sharpen filter with a 1-pixel radius."""

    def __init__(self):
        kernel = numpy.array([[-1, -1, -1],
                              [-1,  9, -1],
                              [-1, -1, -1]])
        VConvolutionFilter.__init__(self, kernel)


class FindEdgesFilter(VConvolutionFilter):
    """An edge-finding filter with a 1-pixel radius."""

    def __init__(self):
        kernel = numpy.array([[-1, -1, -1],
                              [-1,  8, -1],
                              [-1, -1, -1]])
        VConvolutionFilter.__init__(self, kernel)


class EmbossFilter(VConvolutionFilter):
    """An emboss filter with a 1-pixel radius."""

    def __init__(self):
        kernel = numpy.array([[-2, -1, 0],
                              [-1,  1, 1],
                              [ 0,  1, 2]])
        VConvolutionFilter.__init__(self, kernel)
```
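`FindEdgesFilter` in `filters.py` works because its kernel weights sum to zero: in a flat region the centre weight 8 cancels the eight -1 neighbours, so only intensity changes produce a response. `cv2.filter2D` applies the kernel at every pixel; the stdlib-only sketch below does the same on a tiny grayscale image so the numbers can be checked by hand. The function name `apply_kernel_3x3` is hypothetical, and as a simplification border pixels are left at 0 instead of using OpenCV's border extrapolation.

```python
FIND_EDGES_KERNEL = [[-1, -1, -1],
                     [-1,  8, -1],
                     [-1, -1, -1]]


def apply_kernel_3x3(image, kernel):
    """Correlate a grayscale image (list of rows) with a 3x3 kernel.

    Like cv2.filter2D, the kernel is not flipped (correlation).
    Unlike OpenCV, border pixels are simply left at 0.
    """
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            total = 0
            for ky in range(3):
                for kx in range(3):
                    total += kernel[ky][kx] * image[y + ky - 1][x + kx - 1]
            out[y][x] = total
    return out


if __name__ == "__main__":
    # 5x5 image: two dark columns (0) next to three bright ones (100).
    image = [[0, 0, 100, 100, 100] for _ in range(5)]
    for row in apply_kernel_3x3(image, FIND_EDGES_KERNEL):
        print(row)  # middle rows print [0, -300, 300, 0, 0]
```

Only the two columns on either side of the step respond; the uniform bright area gives 0. On a real `uint8` frame, `filter2D` with `ddepth=-1` saturates results to 0..255, so -300 would become 0 and 300 would become 255.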

- New file utils.py

```python
import cv2
import numpy
import scipy.interpolate


def createLookupArray(func, length=256):
    """Return a lookup for whole-number inputs to a function.

    The lookup values are clamped to [0, length - 1].
    """
    if func is None:
        return None
    lookupArray = numpy.empty(length)
    i = 0
    while i < length:
        func_i = func(i)
        lookupArray[i] = min(max(0, func_i), length - 1)
        i += 1
    return lookupArray


def applyLookupArray(lookupArray, src, dst):
    """Map a source to a destination using a lookup."""
    if lookupArray is None:
        return
    dst[:] = lookupArray[src]


def createCurveFunc(points):
    """Return a function derived from control points."""
    if points is None:
        return None
    numPoints = len(points)
    if numPoints < 2:
        return None
    xs, ys = zip(*points)
    if numPoints < 3:
        kind = 'linear'
    elif numPoints < 4:
        kind = 'quadratic'
    else:
        kind = 'cubic'
    return scipy.interpolate.interp1d(xs, ys, kind,
                                      bounds_error = False)


def createCompositeFunc(func0, func1):
    """Return a composite of two functions."""
    if func0 is None:
        return func1
    if func1 is None:
        return func0
    return lambda x: func0(func1(x))
```
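`createLookupArray` evaluates a curve once for every one of the 256 input levels, so `applyLookupArray` only has to index into that table for each pixel; this is what keeps the curve filters cheap enough for real-time video. The stdlib-only sketch below shows the same idea. As an assumption, it swaps SciPy's `interp1d` (which the original uses with quadratic or cubic interpolation for three or more points) for plain piecewise-linear interpolation, and all function names here are hypothetical.

```python
def create_linear_curve_func(points):
    """Piecewise-linear stand-in for utils.createCurveFunc (hypothetical).

    `points` is a list of (x, y) control points sorted by x.
    """
    xs, ys = zip(*points)

    def curve(x):
        # Find the segment [xs[i], xs[i + 1]] containing x and interpolate.
        for i in range(len(xs) - 1):
            if xs[i] <= x <= xs[i + 1]:
                t = (x - xs[i]) / (xs[i + 1] - xs[i])
                return ys[i] + t * (ys[i + 1] - ys[i])
        raise ValueError(f"{x} is outside the control points")

    return curve


def create_lookup_array(func, length=256):
    """Evaluate func at every level, clamp to [0, length - 1], truncate.

    int() mimics assigning float lookup values into a uint8 image.
    """
    return [int(min(max(0, func(i)), length - 1)) for i in range(length)]


def apply_lookup_array(lookup, pixels):
    """Map each 8-bit pixel value through the precomputed table."""
    return [lookup[p] for p in pixels]


if __name__ == "__main__":
    # Blue-channel control points of BGRCrossProcessCurveFilter.
    curve = create_linear_curve_func([(0, 20), (255, 235)])
    lookup = create_lookup_array(curve)
    print(apply_lookup_array(lookup, [0, 128, 255]))  # prints [20, 127, 235]
```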

- Changes to cameo.py

```python
import cv2
import filters
from managers import WindowManager, CaptureManager


class Cameo(object):

    def __init__(self):
        self._windowManager = WindowManager('Cameo',
                                            self.onKeypress)
        self._captureManager = CaptureManager(
            cv2.VideoCapture(0), self._windowManager, True)
        self._curveFilter = filters.BGRPortraCurveFilter()

    def run(self):
        """Run the main loop."""
        self._windowManager.createWindow()
        while self._windowManager.isWindowCreated:
            self._captureManager.enterFrame()
            frame = self._captureManager.frame

            if frame is not None:
                filters.strokeEdges(frame, frame)
                self._curveFilter.apply(frame, frame)

            self._captureManager.exitFrame()
            self._windowManager.processEvents()

    def onKeypress(self, keycode):
        """Handle a keypress.

        space  -> Take a screenshot.
        tab    -> Start/stop recording a screencast.
        escape -> Quit.
        """
        if keycode == 32: # space
            self._captureManager.writeImage('screenshot.png')
        elif keycode == 9: # tab
            if not self._captureManager.isWritingVideo:
                self._captureManager.startWritingVideo(
                    'screencast.avi')
            else:
                self._captureManager.stopWritingVideo()
        elif keycode == 27: # escape
            self._windowManager.destroyWindow()


if __name__=="__main__":
    Cameo().run()
```

In this version, note where `self._curveFilter` is created:

```python
def __init__(self):
    self._windowManager = WindowManager('Cameo',
                                        self.onKeypress)
    self._captureManager = CaptureManager(
        cv2.VideoCapture(0), self._windowManager, True)
    self._curveFilter = filters.BGRPortraCurveFilter()
```

and how each frame is processed inside the `if frame is not None` block:

```python
def run(self):
    """Run the main loop."""
    self._windowManager.createWindow()
    while self._windowManager.isWindowCreated:
        self._captureManager.enterFrame()
        frame = self._captureManager.frame

        if frame is not None:
            filters.strokeEdges(frame, frame)
            self._curveFilter.apply(frame, frame)
```
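Inside `strokeEdges`, the Laplacian edge map `graySrc` becomes an inverse alpha mask `(255 - edge) / 255`, and each BGR channel is multiplied by it: where the edge response is strong the pixel is pushed toward black, which draws the dark outlines, while flat areas (edge response near 0) keep their colour before the Portra curve is applied. A stdlib-only sketch of that per-pixel arithmetic follows; the function names are hypothetical, and `int()` is used on the assumption that it matches how NumPy truncates a float result written back into a `uint8` channel.

```python
def stroke_pixel(bgr, edge):
    """Darken one BGR pixel according to an 8-bit edge strength (0..255).

    Mirrors the per-pixel arithmetic of filters.strokeEdges:
    channel * (255 - edge) / 255, truncated to an integer.
    """
    normalizedInverseAlpha = (1.0 / 255) * (255 - edge)
    return tuple(int(c * normalizedInverseAlpha) for c in bgr)


def stroke_row(pixels, edges):
    """Apply stroke_pixel along a row of pixels and matching edge values."""
    return [stroke_pixel(p, e) for p, e in zip(pixels, edges)]


if __name__ == "__main__":
    row = [(200, 150, 100)] * 3
    edges = [0, 128, 255]  # no edge, medium edge, strong edge
    print(stroke_row(row, edges))
```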

- Result

- Complete source code

The complete source code is available at https://github.com/PacktPublishing/Learning-OpenCV-4-Computer-Vision-with-Python-Third-Edition/tree/master/chapter03/cameo

Visit www.proweb.co.id to broaden your knowledge.

Real Time Edge Detection with OpenCV